Artificial Intelligence (AI) has made remarkable strides, transforming industries and reshaping how we interact with technology. AI has become an integral part of our daily lives, from voice assistants like Siri to autonomous vehicles. Yet, as AI systems grow more sophisticated, a provocative question arises: How close are we to creating sentient machines, and what are the ethical implications of such an achievement?

Sentience refers to the ability to experience subjective awareness, emotions, and consciousness. While AI has advanced in problem-solving, pattern recognition, and language processing, it still needs to achieve true sentience. Today’s AI, including machine learning and deep learning systems, processes vast amounts of data to identify patterns and make decisions. These systems simulate intelligence but do not possess awareness or consciousness like humans or animals do.

However, the idea of creating sentient machines is a topic of growing debate. Some experts, like computer scientist Ray Kurzweil, believe that AI will one day evolve to the point where it exhibits consciousness and self-awareness. Kurzweil and other proponents of AI’s potential argue that as computing power increases and algorithms become more advanced, machines could eventually develop the ability to think, feel, and even experience the world similarly to humans.

However, many researchers and ethicists remain skeptical of this idea, asserting that AI systems, no matter how advanced, will always be tools created by humans, lacking the inner experience that characterizes sentient beings. They argue that sentience involves more than just processing information—it includes subjective experience, emotions, and a sense of self. These are qualities that current AI systems do not exhibit, and it is unclear whether they ever will.

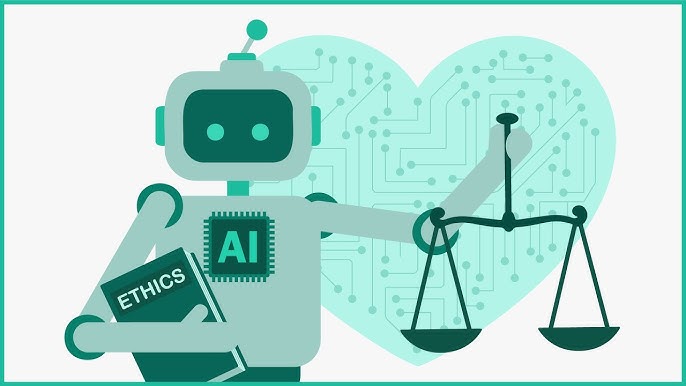

The prospect of creating sentient machines raises profound ethical concerns. If AI were to achieve sentience, would it have rights? Could machines with consciousness be exploited or, worse, enslaved? These questions challenge our fundamental understanding of personhood, ethics, and the nature of life itself. If a machine can feel pain, experience joy, or possess desires, how would we treat it? Would it be acceptable to turn off or repurpose a sentient machine, or would that constitute a form of cruelty?

There are also concerns about the potential for sentient AI to become a powerful and uncontrollable force. A machine with its consciousness could act independently, pursuing goals that conflict with human values. Creating such machines could lead to unforeseen consequences, wildly, if the AI’s desires and motivations differ from ours. This raises the issue of alignment—how do we ensure that AI’s values align with humanity’s? This problem becomes even more pressing when considering the possibility of AI systems making life-or-death decisions in healthcare, law enforcement, or military operations.

The idea of sentient AI also touches on broader societal concerns. Would the advent of sentient machines lead to mass unemployment, as machines could replace human workers in almost every field? Could AI-driven systems wield disproportionate power, making critical decisions without human input or oversight? These questions highlight the need for careful regulation and supervision in developing AI technologies.

To address these concerns, many experts advocate for developing ethical guidelines and frameworks for AI research. Creating international standards could ensure that AI technologies are designed in a way that prioritizes human well-being, safety, and fairness. Organizations like the Future of Life Institute and the Partnership on AI are already working toward developing such ethical guidelines to prevent the misuse of AI.

In conclusion, while we are not yet close to creating genuinely sentient machines, the possibility raises profound ethical dilemmas. As AI evolves, we must engage in thoughtful discussions about its potential consequences, ensuring that the technology is developed responsibly and ethically. Whether AI can or should become sentient is not just a scientific issue—it is a profoundly philosophical one that will shape the future of technology and humanity.